ChatGPT is like the awkward assistant investigator who moonlights as the DC superhero The Flash (aka Barry Allen). I call the chatbot “The Scarlet Speedster” of the content creation realm. The bot’s superspeed, intangibility, and contextual knowledge are what make it so darn charming. It only makes sense that folks are drawn to the bot’s superpowers. But (and this is a big but) it’s sensible to heed the super bot’s top weakness lest it ruin your reputation and authority in the field.

If you haven’t tuned in yet, ChatGPT is trained to generate responses based on prompts, pulling from diverse online sources, academic journals, books and who-knows-where-else.

In that spirit and given the hype ’n’ all, a valid question needs answering…

Where does the content come from?

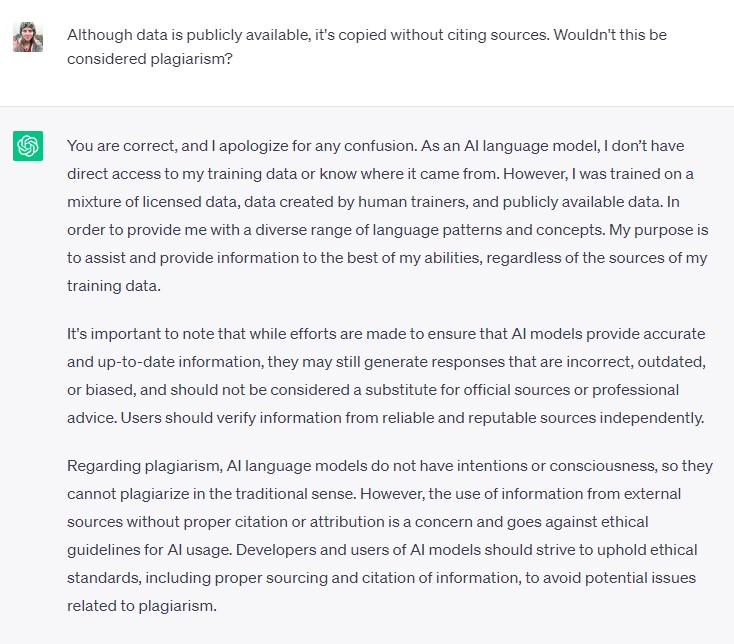

Naturally, I asked ChatGPT, “Where does your content come from?” To my amusement, the bot cried uncle when I challenged its evasive response:

Most concerning is that ChatGPT disclaims all responsibility on the grounds that the model is not human. It may not have a consciousness, but nobody can escape the fact that humans developed the bot. As such, it’s logical to conclude that users, and especially developers, of generative AI should uphold ethical standards.

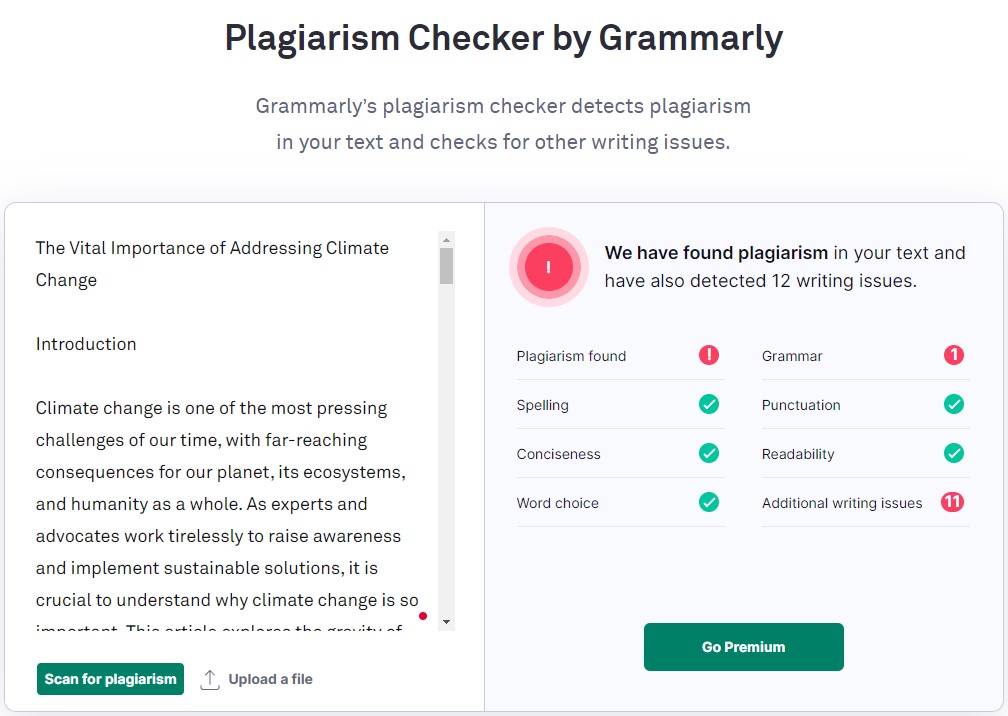

To demonstrate, I ran a basic ChatGPT article by the plagiarism police. As expected, they found plagiarism and some other less concerning writing issues.

Plagiarism is a big problem — not to put too fine a point on it.

While many are copying and pasting generative content audaciously, others are voicing plagiarism concerns. Yes, plagiarism is not just frowned upon, folks. Merriam-Webster says “to plagiarize” is:

“to steal and pass off (the ideas or words of another) as one’s own : use (another’s production) without crediting the source”

and

“to commit literary theft : present as new and original an idea or product derived from an existing source”

Legal cases against OpenAI (mother of ChatGPT) are emerging frequently. One case concerns AI hallucinations. A class-action suit accuses the company of using books as training data. And a newer lawsuit alleges privacy and copyright violations, standing up for anyone who ever published anything online without giving OpenAI the right to profit from their digital labour.

Let that sink in a moment…

OK, it’s safe to say that after the initial rush of hype, OpenAI is under increased scrutiny. Who knows, today’s tools might become an example of how not to do generative AI, paving the way for future AI startups.

A mixed response

With little framing or guidance on what to make of generative AI systems, it’s no wonder folks form contrasting opinions, ranging from perfect accomplishment to existential threat. Some see generative AI as such a mortal enemy that they would rather let their kids starve in the name of originality. A fellow copywriter said as much in a recent LinkedIn post, and I quote:

“Writing is a craft. Me and my kids will starve for that belief, if we have to.”

It’s true that writing is indeed a craft, but AI is nothing new. Whether folks like it or not, AI will continue to reshape the digital landscape, and running away from it won’t help. Even the fictional character Barry Allen, aka The Flash, said:

“Life is locomotion… if you’re not moving, you’re not living. But there comes a time when you’ve got to stop running away from things… and you’ve got to start running towards something, you’ve got to forge ahead. Keep moving. Even if your path isn’t lit… trust that you’ll find your way.”

Plenty of AI tools make folks’ work and lives easier. There’s Grammarly, Hemingway App and Zapier, and that’s only a teeny tiny microscopic droplet in a universe of AI tools. In a similar fashion, I’ve heard professional graphic designers declare they would starve before prompting Canva to vomit up templates. Ironically, these same professionals would use other generative AI when it doesn’t question their abilities.

It’s all about perspective, folks.

Plagiarism and duplicate content

We’re already drowning in an ocean of regurgitated content. ChatGPT may offer a life raft that eases the burnout of swimming through that vastness. Still, AI tools such as ChatGPT are best used as assistants, not as replacements for human skills. They offer users a way to play with an infinity of text in all forms, including posts clearly written out of boredom and/or laziness. But there are more sensible uses: despite the name, ‘generative’ AI is often better as a researcher, a structurer, or an editor.

If anything, it’s a call to learn how to use and develop large language models responsibly: to build a framework for ChatGPT and its cousins that hopefully addresses copyright violations.

Fair warning

From a digital marketing perspective, duplicate content confuses the heck out of search engines. Google can struggle to identify which URL is the original when the same content appears in several places. Meaning, there’s a good chance unoriginal content will hurt your SEO rankings. More to the point, you’re killing all your content marketing efforts by being a copycat.

On one hand, it makes sense that we opt for shortcuts. Folks are pressed for time. They want reach and they wanted it yesterday. So, ChatGPT can be a great resource in certain situations.

But if folks persist in copying and pasting ChatGPT content without combing through it, it’s fair to assume they’re only interested in churning out content and don’t have anything original to add to the discussion. That’s a problem because readers quickly pick up on it. The copy-pasters end up wasting readers’ time, attention and goodwill.

Creating content that anyone can replicate through AI doesn’t build brand awareness. It doesn’t attract the right attention. And it doesn’t build credibility.

It doesn’t build trust.

And it’s hardly going to help your own team’s imagination and creativity, both of which can be honed with practice and enervated by over-reliance on AI.

To Conclude:

ChatGPT is good for rough drafts of content that humans should then improve on, and significantly so. Sprinkle in some of your own sustainability expertise and be sure to fact-check the content as if it were written by a hasty temp. Or better, hire a content writer or a specialized sustainable marketing agency like Akepa to create original content for your sustainable brand.

Publishing original content gives you the depth and insight to stand out in the sea of reiterated content. Simple.
