To avoid any risk of plagiarism or damage to your brand, how can you tell when an article that has ended up in your hands was written with AI?

Let’s look at how to examine the text for the signs of naughtiness yourself…

20 ways to detect AI content yourself

You don’t need tools or apps, because AI writing is distinctive, even though it’s designed to replicate human writing. By now, you may even possess an AI radar that starts to, ahem, resonate almost instantly as you read something. That’s an overall feeling, but here are some of the specific tell-tale signs to look for. And just as with the DSM’s diagnostic criteria for people, not all of them need to be present at once for a diagnosis.

Content:

- General: lacking depth, insight, a refreshing take or an offbeat view.

- Grammatical: fine grammatically, but without the signature touches you’d expect from a sentient writer.

- Inexperienced: raw AI content obviously lacks real personal experiences or examples.

- Formulaic: AI repeats certain phrases or structures, for example “It’s not x, it’s y”, “In today’s {fill-in-the-blank} world”, “here’s the kicker”, asking questions to link paragraphs, or finishing paragraphs with forced morals.

- Hallucinatory: AI can still hallucinate, confidently presenting invented information as fact. Surprisingly high-profile fabrications have slipped through the net.

Tone:

- Safe: it doesn’t venture any controversial opinions. Arguments are so carefully balanced that you could call them conformist.

- Cringey: when prompted to write in a quirkier way, the writing goes too far and gets embarrassing.

- Sassy: further to the above, there’s a try-hard, conspicuous sassiness to lots of AI writing, especially social updates.

- Jargon-y: AI can repeat certain jargon words like ‘delve’, ‘align’, ‘tapestry’ and ‘seamless’. It’s a bit David Brent.

- Overcooked: almost every line tries to sound momentous. This has the effect of making the whole message feel inauthentic.

Structure:

- Breathless: AI likes to write in short, clipped sentences that can be stressful to read. There is no natural, meandering ease to the rhythm.

- Double adjectives: rather than use one adjective, AI tends to use two or more, which is repetitive and annoying.

- Bullets: the machines believe that humans adore structure, like bullet points. But they overdo it, producing articles that look like PowerPoint decks.

- Capitalised headers: for some reason, AI has a habit of capitalising the initial letters of all of the words in headers.

- Em dashes: it’s true that writers use these too but the extra-long em dashes get overused by AI and can be a corroborating sign. We’re just about to use one — so you can see what this longer dash looks like compared to the well-known hyphen.

Starts & Finishes:

- Déjà vu: plausible as it is, have you read this intro somewhere before?

- It isn’t just…: introductions often proclaim that something isn’t merely the small thing we might believe it is, but something far grander. For example, we keep reading that “sustainability isn’t just a buzzword anymore, it’s a… blah blah blah”.

- Lengthy: instead of getting straight to it, AI adds preliminary sections before arriving at the topic that the headline of the post advertises.

- Conclusions: articles typically end with a section simply titled ‘Conclusion’. Like an exam answer, sir.

- Platitudes: AI-written conclusions tend to be overblown, platitudinous footnotes that say nothing incisive about the issues discussed, often with a clunky link to the brand or service.

Context matters too: is it unusual for the person who sent you this to produce writing marked by any of the above?

Bear in mind that these signs are best at catching the worst AI content, which has barely been edited. When AI and humans combine more thoughtfully, the results can be a bit better (and harder to spot).

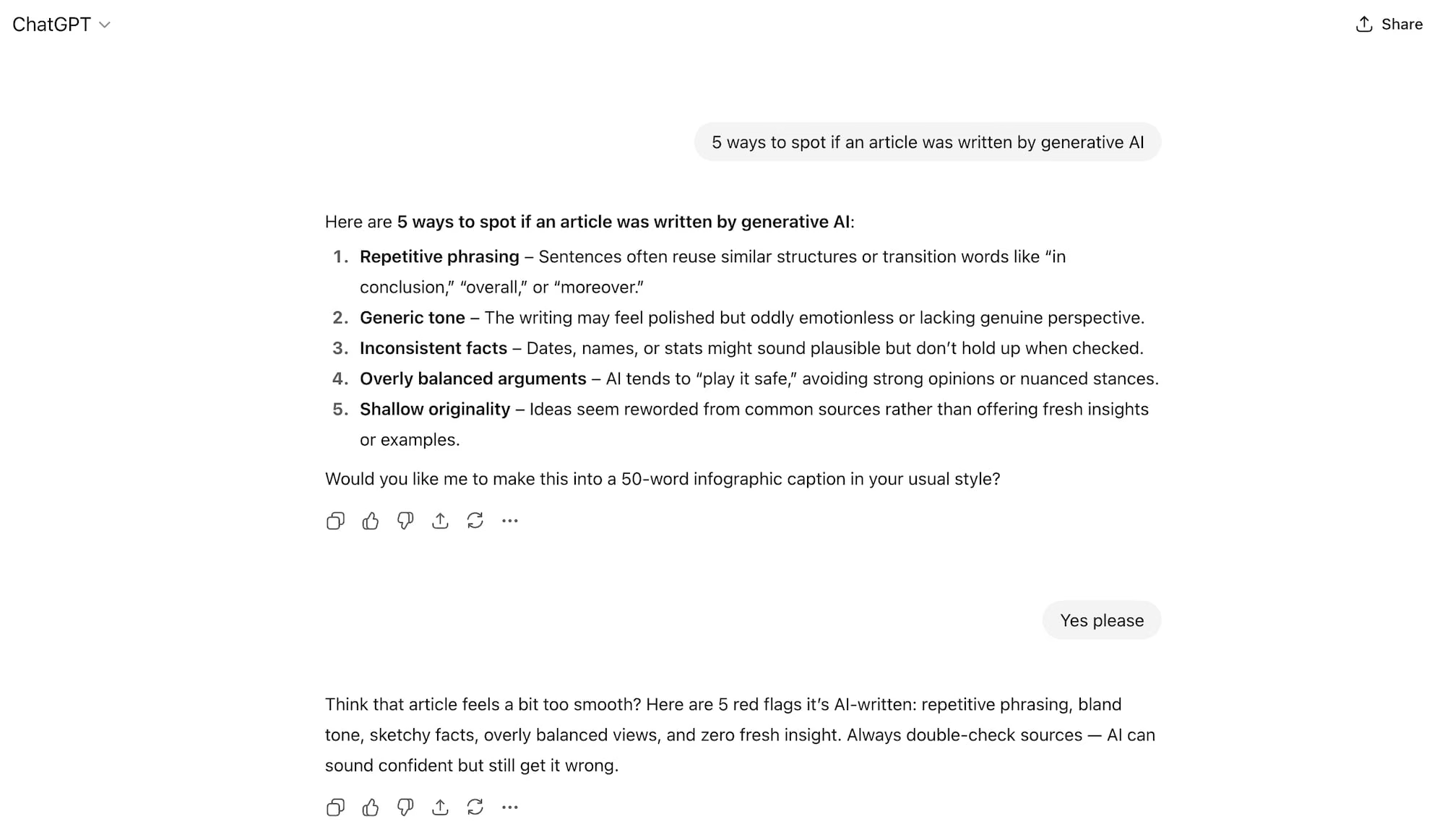

To illustrate the differences between AI and human writing, here’s our friend Chat on how to spot whether an article was written with generative AI. You’ll notice some of the signs from the list above, like a dodgy em dash in the social update and some oily phrases like ‘nuanced stances’.

Apps to help, for the price of free

While you can check yourself, a more objective way is to use a free app, which may corroborate your suspicions.

There are many of these now, several claiming foolproof detection of AI writing. Most use a freemium model, where you can analyse a certain number of words for free but need to pay to go beyond that limit. Some are more skilful than others at spotting the AI presence.

The tricky bit, if you’re looking for an entirely free tool, is that the ‘free’ allowance is often set so low that you must upgrade to ‘premium’ to examine any substantial text, such as a typical blog post of 1,000 words or so (this one runs to around 1,100).

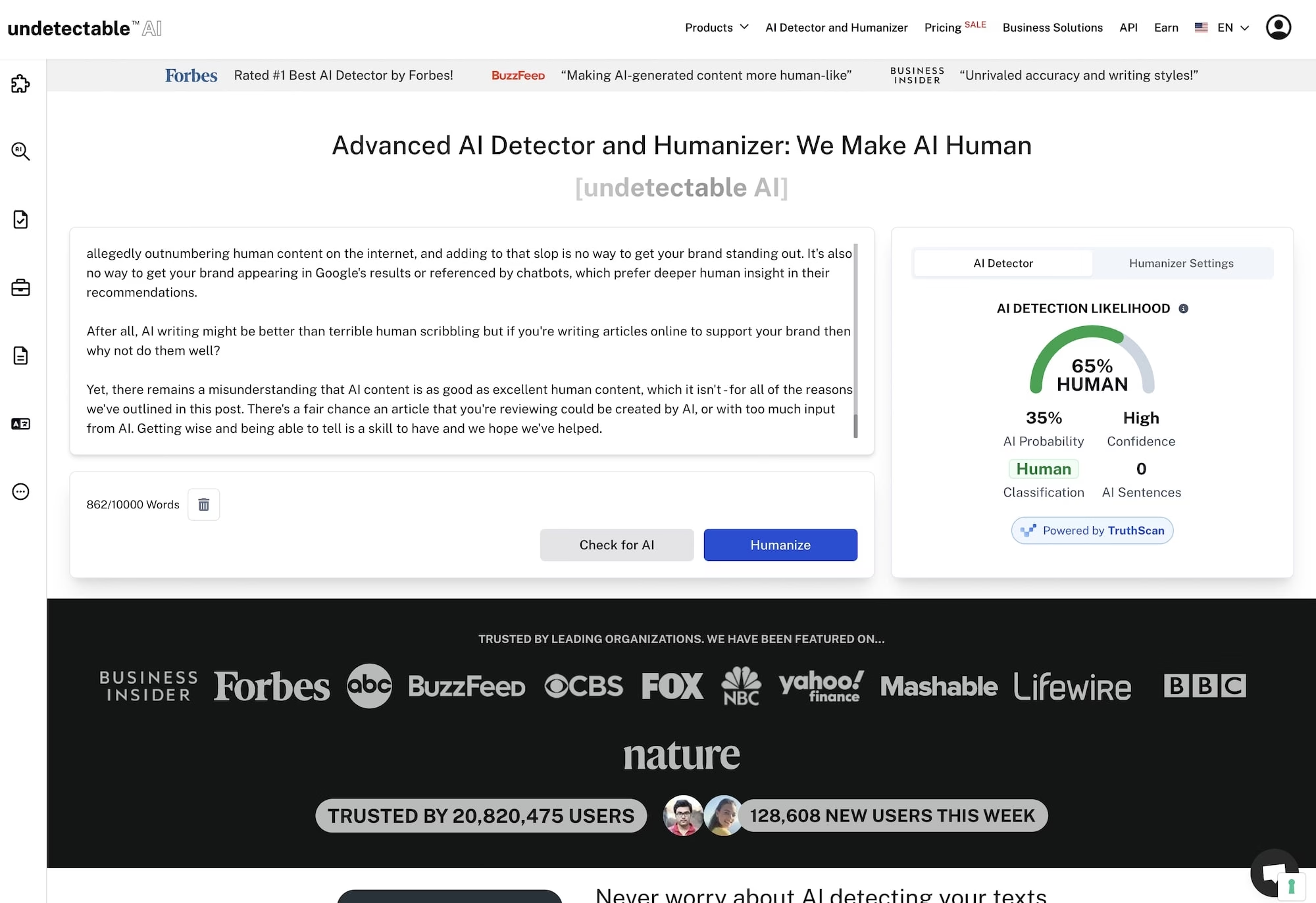

We don’t pay for any tools, but we often use Undetectable AI because of its generous free limit of 10,000 words per check, which you can use as often as you like. Here’s how that looks when examining this blog post, which is entirely human. It’s pretty good at spotting AI, but notice that despite correctly classifying the post as ‘Human’ and detecting zero AI sentences, it reports a likelihood of only 65%. That suggests even the tools are losing some confidence when making a call.

If you’d like to explore other tools, a good ol’ Google search will turn up plenty of options. More and more are appearing and, just like generative AI itself, they’re getting better over time.

And why detect AI content at all?

To wrap things up, rather than writing a trite conclusion as AI would, we’ll explain why you should do this. AI content reportedly now outnumbers human content on the internet, and adding to that slop is no way to make your brand stand out. Nor is it a way to get your brand appearing in Google’s results or referenced by chatbots, which prefer deeper human insight in their recommendations.

After all, AI writing might be better than terrible human writing, but if you’re creating articles to support your brand, why not write them to make an impact? Whether you’re in B2C or B2B, you’re always talking to humans, so isn’t it better to create content that appeals to human readers first? Otherwise, why write words meant to be read at all?

Yet there remains a misunderstanding, though perhaps a fading one, that AI content is as good as excellent human content. It isn’t, for all the reasons we’ve outlined in this post. With that view still widespread, there’s a fair chance that an article you’re reviewing was created by AI, or with too much input from AI. Getting wise and being able to tell the difference is a skill. We hope we’ve helped to increase your dexterity.
